It's about time I took part in a game jam, and this one really caught my eye. I think it's because I tend to work on developer tools these days rather than the game itself; I find it more interesting.

I've always been fascinated with random generators, the kind from the early internet days like Seventh Sanctum. I've long wondered how these worked, and I've created some simple generators using the basic idea over on my playground using HTML, CSS and *shudder* JavaScript. I thought this would be the perfect jam to do some research, really dig into how convincing random generators used to be made, and build one using my favourite C++ and SFML combo.

Warning, this is a long post.

Research - Ask ChatGPT

First off, I had a great conversation with ChatGPT about how those sorts of generators were made and what kind of algorithms they used. It started off explaining 3 ways to implement my game jam idea:

Grammar-based using rules and templates

Basically recursive string replacement: you supply a whole load of word types and the generator keeps swapping them in.

The [adjective] [noun] of [proper-noun or abstract-concept]

Mad-libs approach

A more basic version of the grammar-based approach, where different types of sentences are supplied.

Long ago, in the time of the #adjective# #noun#, the #faction# waged war against the #enemy#

Layered Abstraction

Basically structured nonsense. Here, the word types are already built up from other approaches to create something that sounds more AI generated.

[Proper Noun] was last wielded by the [Faction], a cult that believes [Purpose].

I found this really interesting, especially as the word types like [adjective] and [Purpose] could easily equate to an enum. I could create a list of enum word types, store everything in text documents and then "build" sentences from these word types. Add in some logic using rules and templates, like adjectives must always be before a noun, and I could quickly create something quite random.
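To make the "recursive string replacement" idea concrete, here is a minimal sketch. It is not the jam code: the `Grammar` alias, the `expand` function and the `[symbol]` syntax handling are all assumptions made for illustration. Each `[symbol]` is swapped for a random expansion, and because expansions can contain further `[symbols]`, the function calls itself.

```cpp
#include <cassert>
#include <map>
#include <random>
#include <string>
#include <vector>

// Hypothetical grammar: each symbol maps to a list of possible expansions.
using Grammar = std::map<std::string, std::vector<std::string>>;

// Replace every [symbol] in `text` with a random expansion, recursing so
// that an expansion may itself contain more [symbols].
std::string expand(const std::string& text, const Grammar& grammar,
                   std::mt19937& rng, int depth = 0) {
    assert(depth < 32 && "grammar recursion too deep");
    std::string out;
    std::size_t pos = 0;
    while (pos < text.size()) {
        std::size_t open = text.find('[', pos);
        std::size_t close =
            (open == std::string::npos) ? open : text.find(']', open);
        if (close == std::string::npos) {  // no complete [symbol] left
            out += text.substr(pos);
            break;
        }
        out += text.substr(pos, open - pos);  // literal text before the symbol
        std::string symbol = text.substr(open + 1, close - open - 1);
        auto it = grammar.find(symbol);
        if (it == grammar.end() || it->second.empty()) {
            out += text.substr(open, close - open + 1);  // unknown: keep as-is
        } else {
            std::uniform_int_distribution<std::size_t> pick(
                0, it->second.size() - 1);
            out += expand(it->second[pick(rng)], grammar, rng, depth + 1);
        }
        pos = close + 1;
    }
    return out;
}
```

Calling `expand("[title]", grammar, rng)` with a `title` rule of `The [adjective] [noun] of [concept]` would fill in each inner symbol in turn. The depth check is only there to stop a rule that refers to itself from recursing forever.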

Markov Chains

I then asked if all this data was really just stored in text files and it said yes, but ChatGPT always says yes. In its explanation, though, it started talking about Markov Chains. This is the kind of computer science mumbo jumbo I was hoping to get from GPT. I went off and researched Markov Chains and it turns out they are basically a way to generate sequences of stuff. You seed them, and the next item in the sequence is chosen based only on the item before it; the chain has no view of the entire sequence. Kind of like ChatGPT itself.

Markov Chains are statistical mimicry. They have no concept of grammar, templates or meaning. In essence you could say they are the spiritual ancestor of LLMs.

Armed with this knowledge, I then started wondering if you could give Markov Chains more context. ChatGPT informed me that my best bet would be to implement Conditional Markov Chains mixed with Probabilistic Grammar and Weights. This involves different Markov models like religiousMarkov or plantMarkov mixed with assigned weighted probabilities. So if a weapon name is "Cursed", you're more likely to get a backstory involving betrayal or a wielder with a tragic fate over a backstory where the goddess bestowed it due to kindness.
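The weighting part of that suggestion can be sketched with the standard library's `std::discrete_distribution`. This is only an illustration under assumptions: the `ThemeTable` layout, the `pickTheme` function and the idea of keying themes by a name tag such as "Cursed" are made up here, and it covers just the weighted choice, not the separate Markov models.

```cpp
#include <cassert>
#include <map>
#include <random>
#include <string>
#include <vector>

// Hypothetical data layout: one backstory theme plus how likely it is.
struct WeightedTheme {
    std::string theme;
    double weight;
};

// Hypothetical table: a tag found in the item name -> its weighted themes.
using ThemeTable = std::map<std::string, std::vector<WeightedTheme>>;

// Pick a theme for the given tag; higher weights are chosen more often.
// Throws std::out_of_range (from map::at) if the tag is not in the table.
std::string pickTheme(const ThemeTable& table, const std::string& tag,
                      std::mt19937& rng) {
    const std::vector<WeightedTheme>& options = table.at(tag);
    assert(!options.empty());
    std::vector<double> weights;
    for (const WeightedTheme& option : options) {
        weights.push_back(option.weight);
    }
    // discrete_distribution returns index i with probability
    // weights[i] / sum(weights).
    std::discrete_distribution<std::size_t> dist(weights.begin(),
                                                 weights.end());
    return options[dist(rng)].theme;
}
```

With a "Cursed" entry weighted, say, 8 for betrayal, 6 for a tragic fate and 1 for divine kindness, the kind backstory can still turn up, just rarely. Those numbers are placeholders to tune by feel.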

V1 - Basic C++ Lore Generator

Armed with some fun research, I then went off and made a basic console version of what I wanted. To start off, I made a simple enum of word types and a map from word types to strings. I then 'built' sentences from those word types and created a random sentence by filling in each word type with one of those strings:
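A minimal sketch of that enum-and-map setup, written for this post rather than lifted from the jam code. The names `WordType`, `WordPools`, `Part` and `buildSentence` are illustrative assumptions, and it uses `std::variant`, so it needs C++17.

```cpp
#include <cassert>
#include <map>
#include <random>
#include <string>
#include <variant>
#include <vector>

// Hypothetical word types; the real list would be longer.
enum class WordType { Adjective, Noun, Concept };

// Each word type maps to the pool of strings it can be filled with.
using WordPools = std::map<WordType, std::vector<std::string>>;

// A sentence pattern is a list of parts: literal text or a word type slot.
using Part = std::variant<std::string, WordType>;

// Walk the pattern, copying literals and filling each slot at random.
std::string buildSentence(const std::vector<Part>& pattern,
                          const WordPools& pools, std::mt19937& rng) {
    std::string sentence;
    for (const Part& part : pattern) {
        if (const std::string* literal = std::get_if<std::string>(&part)) {
            sentence += *literal;
        } else {
            const std::vector<std::string>& pool =
                pools.at(std::get<WordType>(part));
            assert(!pool.empty());
            std::uniform_int_distribution<std::size_t> pick(0,
                                                            pool.size() - 1);
            sentence += pool[pick(rng)];
        }
    }
    return sentence;
}
```

A pattern such as `{"The ", WordType::Adjective, " ", WordType::Noun, " of ", WordType::Concept}` (with the literals wrapped in `std::string`) mirrors the `The [adjective] [noun] of [...]` template from the research section.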

All good stuff.

V2 - Basic C++ Lore Generator

I expanded slightly on this version and moved over to using text files so I could easily get GPT to generate a load of words or phrases for me that I could add to the files. I added a way to load those in to be used instead of hardcoding it in main.

V3 - Creating a Basic Markov Chain Model

This is where shit got fun. With the help of ChatGPT, I got a very simple Markov generator going that takes in a text file of words and then "trains" itself on that data set. Then, in the place where it gets a random word from the "word pool" of that specific type, it would request a word from the generator instead. These words were actually plausible and looked 'right'.
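For anyone curious what "training" means here, this is a rough character-level sketch, assuming an order-N chain over letters. It is not the generator from the jam: the class name `MarkovNameGenerator`, the `^` and `$` marker characters and the `maxLength` cap are all choices made for this example.

```cpp
#include <cassert>
#include <map>
#include <random>
#include <string>
#include <vector>

class MarkovNameGenerator {
public:
    explicit MarkovNameGenerator(std::size_t order) : order_(order) {
        assert(order > 0);
    }

    // "Training": for every run of `order_` characters, remember which
    // character followed it. Repeats are kept, so common followers are
    // picked more often later.
    void train(const std::vector<std::string>& names) {
        for (const std::string& name : names) {
            // Start markers record how names begin; the end marker records
            // where they stop.
            std::string padded = std::string(order_, kStart) + name + kEnd;
            for (std::size_t i = 0; i + order_ < padded.size(); ++i) {
                transitions_[padded.substr(i, order_)].push_back(
                    padded[i + order_]);
            }
        }
    }

    // Walk the chain from the start state until an end marker is drawn,
    // an unseen state is reached, or the length cap is hit.
    std::string generate(std::mt19937& rng, std::size_t maxLength = 24) const {
        std::string state(order_, kStart);
        std::string result;
        while (result.size() < maxLength) {
            auto it = transitions_.find(state);
            if (it == transitions_.end()) break;
            const std::vector<char>& next = it->second;
            std::uniform_int_distribution<std::size_t> pick(0,
                                                            next.size() - 1);
            char c = next[pick(rng)];
            if (c == kEnd) break;
            result += c;
            state = state.substr(1) + c;  // slide the window along by one
        }
        return result;
    }

private:
    static constexpr char kStart = '^';  // assumed not to appear in names
    static constexpr char kEnd = '$';    // assumed not to appear in names
    std::size_t order_;
    std::map<std::string, std::vector<char>> transitions_;
};
```

Trained on a handful of similar names, a chain like this can splice the start of one onto the end of another, which is why the output tends to look 'right' without being a straight copy.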

You can see here my full list of names I created and the name the generator created. I also cleaned up the code at this point and moved everything out into separate classes as it was getting messy in main and I hate having global variables and static classes just hanging around.

At this point, it kind of felt like I had created baby's first LLM and I was very happy. It then occurred to me that I could chain the Markov chains together to create the initial lore template itself, essentially creating a fully generative 'lore engine' so to speak.

I put this to ChatGPT and it started talking about word tokens instead of using individual characters and it was like a lightbulb went off in my head. ChatGPT itself works on tokens instead of characters (you've probably seen this from the infamous 'how many r's are there in strawberry'). I would now be recreating this, albeit in a very basic and crude manner.

V4 - Markov Based Word Chain Generator

First, I turned my initial Markov generator class into an abstract base class with pure virtual functions, and created MarkovWordGenerator, which inherits from it. The Markov Word Chain generator still needs an order and train/generate functions, but they need to work slightly differently. This would then allow me to have Word Generators and Sentence Generators.
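Roughly, that split could look like the sketch below. Only the name MarkovWordGenerator comes from the project; the base class name `MarkovGenerator`, the function signatures, the `<s>` and `</s>` marker tokens and the 40-word cap are assumptions for illustration.

```cpp
#include <cassert>
#include <map>
#include <random>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical abstract base: every generator has an order, train, generate.
class MarkovGenerator {
public:
    explicit MarkovGenerator(std::size_t order) : order_(order) {
        assert(order > 0);
    }
    virtual ~MarkovGenerator() = default;
    virtual void train(const std::vector<std::string>& lines) = 0;
    virtual std::string generate(std::mt19937& rng) const = 0;

protected:
    std::size_t order_;
};

// Word-token version: the state is the last `order_` words, not characters.
class MarkovWordGenerator : public MarkovGenerator {
public:
    using MarkovGenerator::MarkovGenerator;

    void train(const std::vector<std::string>& lines) override {
        for (const std::string& line : lines) {
            std::vector<std::string> tokens(order_, kStart);
            std::istringstream stream(line);
            for (std::string word; stream >> word;) tokens.push_back(word);
            tokens.push_back(kEnd);
            for (std::size_t i = 0; i + order_ < tokens.size(); ++i) {
                transitions_[key(tokens, i)].push_back(tokens[i + order_]);
            }
        }
    }

    std::string generate(std::mt19937& rng) const override {
        std::vector<std::string> tokens(order_, kStart);
        std::string sentence;
        for (std::size_t i = 0; i < kMaxWords; ++i) {
            auto it = transitions_.find(key(tokens, tokens.size() - order_));
            if (it == transitions_.end()) break;
            std::uniform_int_distribution<std::size_t> pick(
                0, it->second.size() - 1);
            const std::string& word = it->second[pick(rng)];
            if (word == kEnd) break;
            if (!sentence.empty()) sentence += ' ';
            sentence += word;
            tokens.push_back(word);
        }
        return sentence;
    }

private:
    // Join `order_` tokens starting at `start` into one map key.
    std::string key(const std::vector<std::string>& tokens,
                    std::size_t start) const {
        std::string joined;
        for (std::size_t i = start; i < start + order_; ++i) {
            joined += tokens[i];
            joined += '\n';  // separator that whitespace-split words never contain
        }
        return joined;
    }

    static constexpr const char* kStart = "<s>";
    static constexpr const char* kEnd = "</s>";
    static constexpr std::size_t kMaxWords = 40;
    std::map<std::string, std::vector<std::string>> transitions_;
};
```

A character-level generator would derive from the same base and override `train` and `generate` with per-letter logic, so calling code can hold a `MarkovGenerator&` and not care which one it has.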

This did work...somewhat. The Markov model was too accurate given the limited data set I was feeding it, which meant it always returned an exact sentence from the training text file. Dropping the order to 1 gave the model less context, but the sentences it produced were jumbled and didn't make sense (in English anyway).

I also wanted more control over the types of sentences created. So I ended up making a text file that had sentence templates for item lore.

I then "trained" a Markov model on a large text file of item lore sentences that ChatGPT produced for me. I then created functions that allow the user to just call "generateItemLore()", and it goes off, chooses a random item lore template, then replaces the bracketed sections with something from a word pool or, in the case of {{sentence}}, replaces it with a Markov-generated sentence.
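The template step could be sketched like this. It is a guess at the shape, not the real code: it assumes every placeholder uses the `{{tag}}` form (only `{{sentence}}` is confirmed above), `fillTemplate` is a made-up helper, the parameters of `generateItemLore` are invented, and the `makeSentence` callback stands in for whatever Markov generator is plugged in.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <random>
#include <string>
#include <vector>

// Hypothetical word pools keyed by placeholder name, e.g. "faction".
using WordPools = std::map<std::string, std::vector<std::string>>;

// Replace each {{tag}} with a random word from its pool; {{sentence}} is
// special and is filled by calling makeSentence instead.
std::string fillTemplate(const std::string& tmpl, const WordPools& pools,
                         const std::function<std::string()>& makeSentence,
                         std::mt19937& rng) {
    std::string out;
    std::size_t pos = 0;
    while (true) {
        std::size_t open = tmpl.find("{{", pos);
        std::size_t close =
            (open == std::string::npos) ? open : tmpl.find("}}", open + 2);
        if (close == std::string::npos) {  // no complete placeholder left
            out += tmpl.substr(pos);
            break;
        }
        out += tmpl.substr(pos, open - pos);
        std::string tag = tmpl.substr(open + 2, close - open - 2);
        auto it = pools.find(tag);
        if (tag == "sentence") {
            out += makeSentence();
        } else if (it != pools.end() && !it->second.empty()) {
            std::uniform_int_distribution<std::size_t> pick(
                0, it->second.size() - 1);
            out += it->second[pick(rng)];
        } else {
            out += tmpl.substr(open, close + 2 - open);  // unknown: keep as-is
        }
        pos = close + 2;
    }
    return out;
}

// Choose a random template, then fill it in.
std::string generateItemLore(const std::vector<std::string>& templates,
                             const WordPools& pools,
                             const std::function<std::string()>& makeSentence,
                             std::mt19937& rng) {
    assert(!templates.empty());
    std::uniform_int_distribution<std::size_t> pick(0, templates.size() - 1);
    return fillTemplate(templates[pick(rng)], pools, makeSentence, rng);
}
```

Passing the sentence source in as a callback keeps the template code independent of the Markov class, which makes it easy to test with a fixed string before wiring in the real generator.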

I then added a way to have the user input a name for the item or generate one randomly:

Even with a larger "data set", I still have to set the model to the lowest order (1). The sentences make more sense than previously but can still seem 'off'. It'll do though.

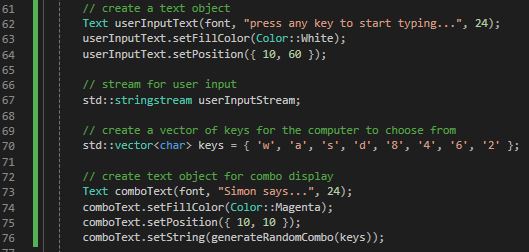

V5 - Creating the UI

Now with a simple item lore generator pretty much created, I wanted to move away from the console for a bit. I kept them as separate projects so I could continue working in the console one to prove out ideas then move it over to the UI version.

So I created a window, added a nice background image and some background music, then I got to creating the text boxes and remembered that SFML and text is not great. This is a program that is mainly reliant on entering text and displaying text. So I switched out SFML for FLTK. FLTK is a fantastic little library that is way more suited for this kind of task than SFML. The only issue is that I have my own wrapper around the library and I haven't turned that into a library yet so I had to pull every single file across.

Honestly, the thought of having to create a scrolling text box class in SFML just had me noping out within 5 seconds. FLTK was made for this kind of program.

The weekend then happened. Also it was sunny, which is rare for England, so I forgot about it until an hour before the jam closed. I considered not submitting but for once I was seeing a jam through. And I managed to submit with 11 minutes left to go:

Overall Thoughts

This was a lot of fun, especially as I've come to understand some of the ideas that LLMs grew out of. There is so much power in having a "locally trained" generator though. I'd be interested in making this do other things like generate documentation on code bases. But for the moment, I'd like to add a proper UI using a library and then expand it to creating lore for places and people. Also, allow more modding capabilities.